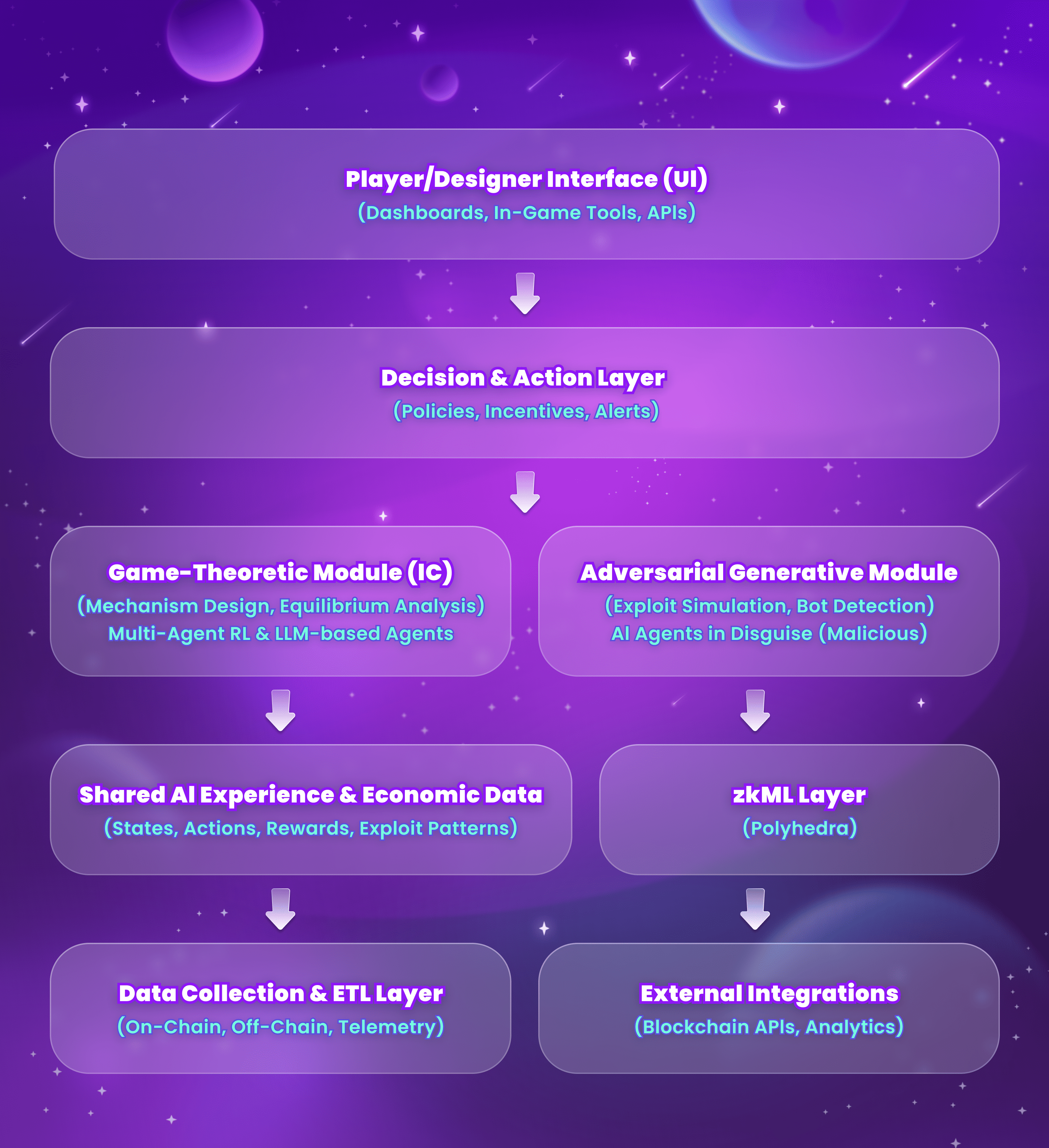

4. Verifiable AI Game Framework: System Design

AIdea’s Verifiable AI Game Framework unifies adaptive AI modules, robust mechanism design, and privacy-preserving zero-knowledge proofs. By combining adversarial modeling, multi-agent reinforcement learning (RL), and Polyhedra’s zkML technology, AIdea can prove that critical operations, such as reward distribution and cheat detection, were computed exactly as specified while keeping sensitive data confidential.

Core Technology Modules

Player/Designer Interface (UI). This top-level interface provides dashboards, in-game tools, and APIs through which players and designers interact with the system. Players monitor their rewards, take strategic actions, and manage in-game assets; designers adjust tokenomics, configure anti-cheat policies, and oversee the overall game environment. Real-time analytics keep stakeholders informed of system updates, gameplay adjustments, and economic changes, and the interface connects users directly to the Decision & Action Layer.

Decision & Action Layer. Acting as the coordinator, this layer translates AI outputs into operational changes in the live game ecosystem. It applies recommended policy shifts, such as modifying token emission rates, tweaking quest difficulty, or setting user privileges, and issues timely alerts to the relevant parties. By deploying new measures continuously, it keeps the environment current and aligned with the platform’s incentive-compatible rules, preventing stagnation and reinforcing fair play.
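
As a rough illustration of how recommendations might be applied safely, the Python sketch below clamps each proposed change to a per-parameter range and a maximum step size before writing it to the live configuration, and records an alert for every change or rejection. The parameter names, bounds, and 20% step cap (`GameConfig`, `Recommendation`, `BOUNDS`, `MAX_RELATIVE_STEP`, `apply_recommendations`) are hypothetical and not part of AIdea's published design.

```python
from dataclasses import dataclass

@dataclass
class GameConfig:
    # Hypothetical live parameters the layer may change.
    token_emission_rate: float = 100.0  # tokens per epoch (assumed unit)
    quest_difficulty: float = 1.0       # multiplier; 1.0 = baseline

@dataclass
class Recommendation:
    # One policy change proposed by an AI module.
    parameter: str
    new_value: float
    source: str

# Assumed guardrails: hard bounds per parameter and a cap on each step.
BOUNDS = {"token_emission_rate": (10.0, 500.0), "quest_difficulty": (0.5, 3.0)}
MAX_RELATIVE_STEP = 0.2  # at most a 20% change per update

def apply_recommendations(config, recs):
    """Apply recommendations within the guardrails and return alert messages."""
    alerts = []
    for rec in recs:
        if rec.parameter not in BOUNDS:
            alerts.append(f"rejected {rec.parameter} from {rec.source}: unknown parameter")
            continue
        lo, hi = BOUNDS[rec.parameter]
        current = getattr(config, rec.parameter)
        max_step = abs(current) * MAX_RELATIVE_STEP
        # Limit the size of the move, then clamp into the allowed range.
        stepped = min(max(rec.new_value, current - max_step), current + max_step)
        applied = min(max(stepped, lo), hi)
        setattr(config, rec.parameter, applied)
        if applied == rec.new_value:
            alerts.append(f"{rec.parameter} set to {applied} ({rec.source})")
        else:
            alerts.append(f"{rec.parameter}: requested {rec.new_value}, applied {applied} ({rec.source})")
    return alerts

cfg = GameConfig()
alerts = apply_recommendations(cfg, [
    Recommendation("token_emission_rate", 60.0, "game_theoretic"),
    Recommendation("quest_difficulty", 1.1, "adversarial"),
    Recommendation("gravity", 2.0, "unknown_module"),
])
```

Capping the step size keeps a single faulty recommendation from swinging the economy abruptly: here the requested emission cut from 100 to 60 is applied only down to 80, and further reductions would take additional update cycles.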

Game-Theoretic Module (IC Mechanisms). Rooted in mechanism design and equilibrium analysis, this module builds incentive-compatible (IC) mechanisms so that rational participants find cooperation and fair play more profitable than cheating. By simulating cooperative, neutral, and adversarial player strategies through multi-agent RL and LLM-based analysis, it identifies reward structures that make fair play self-enforcing. This lets it anticipate imbalances before they surface, preserving stable game economies and steering the ecosystem toward outcomes that benefit both individual participants and the broader community.
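
The core incentive-compatibility condition can be illustrated with a single-shot expected-payoff check, a far simpler stand-in for the multi-agent RL and LLM-based simulations described above. Assuming a cheat strategy adds a gain g when undetected, is caught with probability p, and costs a penalty P when caught, cheating is unprofitable exactly when (1 - p) * g <= p * P. The strategy names and numbers below are illustrative, not measured values.

```python
from dataclasses import dataclass

@dataclass
class CheatStrategy:
    name: str
    extra_gain: float      # g: reward gained over honest play if undetected
    detection_prob: float  # p: chance the anti-cheat pipeline catches it

def expected_cheat_payoff(honest_payoff, s, penalty):
    # Undetected (prob 1 - p): honest payoff + g. Detected (prob p): honest payoff - penalty.
    p = s.detection_prob
    return (1 - p) * (honest_payoff + s.extra_gain) + p * (honest_payoff - penalty)

def is_incentive_compatible(honest_payoff, strategies, penalty):
    """True if no listed cheat strategy beats honest play in expectation."""
    return all(expected_cheat_payoff(honest_payoff, s, penalty) <= honest_payoff
               for s in strategies)

def min_deterrent_penalty(strategies):
    """Smallest penalty P satisfying (1 - p) * g <= p * P for every strategy."""
    needed = []
    for s in strategies:
        if s.detection_prob <= 0:
            return float("inf")  # an undetectable cheat cannot be deterred by penalties alone
        needed.append((1 - s.detection_prob) * s.extra_gain / s.detection_prob)
    return max(needed, default=0.0)

strategies = [CheatStrategy("botting", 30.0, 0.6), CheatStrategy("sybil", 50.0, 0.25)]
```

With these numbers the Sybil strategy dominates the requirement (a penalty of 150 versus 20 for botting). Raising the Sybil detection probability to 0.5 would cut the required penalty to 50, which is why improving detection can be a cheaper lever than raising penalties.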

Adversarial Generative Module. This component targets malicious or fraudulent activity using AI-driven disguised adversaries and exploit simulations. Methods such as Generative Adversarial Networks (GANs) generate candidate cheating scenarios, ranging from botting patterns to Sybil-style multi-account strategies, while the system concurrently learns to recognize and neutralize them. By surfacing vulnerabilities proactively and prompting real-time policy refinements, the module reduces the need for emergency developer interventions and keeps the game environment equitable.
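
The exploit-simulation half of this loop can be sketched without a full GAN. In the toy Python example below, a generator sweeps a bot's timing jitter and measures how often its synthetic sessions slip past a simple detector that flags unusually regular action timing. The coefficient-of-variation detector, the 0.15 threshold, and the 2-second base interval are assumptions chosen for illustration; a production system would use learned models on both sides.

```python
import random
import statistics

def generate_bot_session(rng, base_interval, jitter, n_actions=50):
    """Synthetic bot trace: seconds between actions = base interval +/- uniform jitter."""
    return [base_interval + rng.uniform(-jitter, jitter) for _ in range(n_actions)]

def is_flagged(intervals, cv_threshold):
    # Heuristic: human timing is irregular, so a low coefficient of variation looks automated.
    cv = statistics.pstdev(intervals) / statistics.mean(intervals)
    return cv < cv_threshold

def evasion_rates(jitters, base_interval=2.0, cv_threshold=0.15, trials=200, seed=7):
    """For each jitter level, the fraction of generated bot sessions the detector misses."""
    rng = random.Random(seed)  # seeded so the simulation is reproducible
    rates = {}
    for jitter in jitters:
        missed = sum(
            not is_flagged(generate_bot_session(rng, base_interval, jitter), cv_threshold)
            for _ in range(trials)
        )
        rates[jitter] = missed / trials
    return rates

rates = evasion_rates([0.0, 0.1, 0.5, 1.5])
```

Jitter levels whose evasion rate rises above zero mark where the detector needs additional signals, for example session length or action diversity, which is the kind of policy refinement this module is meant to prompt.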

Shared AI Experience & Economic Data. Acting as the central repository, this layer aggregates action logs, user states, resource flows, and flagged exploit instances from across the framework. All AI modules read from and write to this common data source, ensuring synchronized insights and a unified view of user behavior, token dynamics, and marketplace shifts. Because this history lives in one place, the layer also simplifies analysis and auditing, letting designers track historical trends, study the effects of recent updates, and validate system integrity.
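
A minimal in-memory sketch of such a shared repository follows. Every module writes typed records through one interface and reads them back by kind, player, and time, and a per-player history view supports auditing. The record kinds mirror the categories listed above; the class and field names (`Record`, `SharedDataStore`) are hypothetical, and a real deployment would sit on a durable database or event log.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Record categories named in the text above.
RECORD_KINDS = {"action_log", "user_state", "resource_flow", "flagged_exploit"}

@dataclass(frozen=True)
class Record:
    kind: str           # one of RECORD_KINDS
    player_id: str
    timestamp: int      # assumed: Unix seconds
    source_module: str  # which module wrote the record
    payload: dict = field(default_factory=dict)

class SharedDataStore:
    def __init__(self):
        self._by_kind = defaultdict(list)

    def write(self, record):
        if record.kind not in RECORD_KINDS:
            raise ValueError(f"unknown record kind: {record.kind}")
        self._by_kind[record.kind].append(record)

    def read(self, kind, player_id=None, since=0):
        """Records of one kind, optionally filtered by player and minimum timestamp."""
        return [r for r in self._by_kind[kind]
                if r.timestamp >= since and (player_id is None or r.player_id == player_id)]

    def history(self, player_id):
        """All records for one player across kinds, oldest first (for audits)."""
        rows = [r for records in self._by_kind.values() for r in records
                if r.player_id == player_id]
        return sorted(rows, key=lambda r: r.timestamp)

store = SharedDataStore()
store.write(Record("action_log", "p1", 100, "etl", {"action": "craft"}))
store.write(Record("resource_flow", "p2", 120, "etl", {"token": "GOLD", "amount": 5}))
store.write(Record("flagged_exploit", "p1", 160, "adversarial", {"pattern": "regular_timing"}))
```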

zkML Layer (Polyhedra). Incorporating Polyhedra’s zkML technology and its Expander high-speed proof system, this layer lets AIdea verify AI decisions on-chain without exposing internal model logic or user data. By generating zero-knowledge proofs for reward allocations, anti-cheat results, and other key outputs, the system demonstrates that each output was computed correctly while preserving privacy. Any tampered result fails on-chain verification, creating a transparent, tamper-resistant foundation that reinforces trust among all participants.
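
On the settlement side, this flow reduces to a simple rule: rewards are paid only if the proof verifies against the registered model commitment. The Python sketch below models that gate. The `verify` callable stands in for a real zkML verifier and is not Polyhedra's or Expander's API; the hash-based stub included for testing only binds the published outputs to the proof. It proves nothing about how those outputs were computed and is not zero-knowledge.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class ProvenResult:
    model_commitment: str  # public: commitment to the model weights (e.g. a hash)
    input_commitment: str  # public: commitment to the private inputs
    rewards: tuple         # public output: ((player_id, amount), ...)
    proof: bytes           # opaque bytes produced by the prover

# Hypothetical verifier interface: (public_inputs, proof) -> accepted?
Verifier = Callable[[tuple, bytes], bool]

def settle_rewards(result, registered_model, verify: Verifier, ledger):
    """Credit rewards only if the result comes from the registered model and its proof verifies."""
    if result.model_commitment != registered_model:
        raise ValueError("result was not produced by the registered model")
    public_inputs = (result.model_commitment, result.input_commitment, result.rewards)
    if not verify(public_inputs, result.proof):
        raise ValueError("proof rejected; no rewards paid")
    for player_id, amount in result.rewards:
        ledger[player_id] = ledger.get(player_id, 0) + amount
    return ledger

# Test stub only: binds outputs to the "proof" but proves nothing about the computation.
def stub_prove(public_inputs):
    return hashlib.sha256(repr(public_inputs).encode()).digest()

def stub_verify(public_inputs, proof):
    return stub_prove(public_inputs) == proof

MODEL = "model-v1-commitment"
rewards = (("p1", 40), ("p2", 25))
honest = ProvenResult(MODEL, "inputs-commitment", rewards,
                      stub_prove((MODEL, "inputs-commitment", rewards)))
ledger = settle_rewards(honest, MODEL, stub_verify, {})

# Inflating a reward while reusing the original proof no longer verifies.
tampered = ProvenResult(MODEL, "inputs-commitment", (("p1", 400), ("p2", 25)), honest.proof)
tampered_ok = stub_verify((MODEL, "inputs-commitment", tampered.rewards), tampered.proof)
```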

Data Collection & ETL Layer. Designed to unify the diverse flow of information from on-chain transactions, off-chain server telemetry, and user-generated inputs, this layer converts raw data into a standardized format. On-chain records, such as wallet activity and NFT event logs, are joined with user session metrics and chat transcripts, giving both economic analytics and gameplay adjustments a comprehensive, real-time view of the system. This refined dataset feeds the AI engine’s learning and decision-making.
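
The normalization step can be sketched as mapping each raw source into one shared event schema and resolving wallet addresses to player IDs, so that on-chain and off-chain records join on a single key. The raw field names (`blockTime`, `ts_ms`, and so on), the `Event` schema, and the wallet mapping are assumptions for illustration; chat transcripts would need their own parser and are omitted here.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Event:
    source: str      # "onchain" or "server"
    player_id: str
    event_type: str
    timestamp: int   # Unix seconds
    value: float     # numeric magnitude; 0.0 when not applicable

def from_onchain(log):
    # Assumed raw shape: {"from": "0xAbC", "event": "Transfer", "blockTime": 1700000100, "amount": "25"}
    return Event("onchain", log["from"].lower(), log["event"].lower(),
                 int(log["blockTime"]), float(log.get("amount", 0)))

def from_server(row):
    # Assumed raw shape: {"uid": "p1", "type": "session_end", "ts_ms": 1700000050123, "duration_s": 600}
    return Event("server", row["uid"], row["type"].lower(),
                 int(row["ts_ms"]) // 1000, float(row.get("duration_s", 0)))

def run_etl(onchain_logs, server_rows, wallet_to_player):
    """Normalize both sources, key on-chain events by player ID, and order by time."""
    events = []
    for log in onchain_logs:
        e = from_onchain(log)
        # Keep the lower-cased address when the wallet is not linked to a player.
        events.append(replace(e, player_id=wallet_to_player.get(e.player_id, e.player_id)))
    events.extend(from_server(row) for row in server_rows)
    return sorted(events, key=lambda e: e.timestamp)

events = run_etl(
    [{"from": "0xAbC", "event": "Transfer", "blockTime": 1700000100, "amount": "25"}],
    [{"uid": "p1", "type": "session_end", "ts_ms": 1700000050123, "duration_s": 600}],
    {"0xabc": "p1"},
)
```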

External Integrations. As a final facet of AIdea’s architecture, these connections link the platform to various blockchain APIs, analytics services, and partner tools. Smart contracts manage proof validation on-chain, while analytics platforms monitor in-game token flows, user retention, and security incidents in real time. Partnerships can include everything from guild-based collaborations to NFT marketplaces, all of which benefit from AIdea’s verifiable AI and consistent, adaptive rule enforcement.

AIdea’s Verifiable AI Game Framework represents a groundbreaking fusion of advanced AI, game-theoretic design, and cutting-edge privacy-preserving technologies. Its architecture is built on three key principles: fairness, adaptability, and verifiability. By integrating multi-agent reinforcement learning, adversarial modeling, and mechanism design, the framework keeps the game’s economy balanced and self-enforcing. Players are incentivized to engage honestly because the rules make rational, legitimate behavior the most rewarding path, while exploitative strategies are systematically discouraged.

A key technological strength of the framework is its modularity, which enables real-time adaptation. The Decision & Action Layer continuously refines tokenomics, quest structures, and gameplay rules based on player activity and market conditions. The Game-Theoretic and Adversarial Generative Modules address potential vulnerabilities proactively, allowing the system to neutralize cheating and economic disruptions before they escalate. The Shared AI Experience & Economic Data repository keeps all modules working from synchronized insights, improving the accuracy and efficiency of AI decision-making.

The integration of zkML through Polyhedra’s Expander-based proof system adds a verifiable layer of trust to the framework. Critical operations, such as reward allocation and exploit detection, can be proven correct while user privacy and proprietary model details stay protected. As a result, AIdea becomes a tamper-resistant system in which trust in these computations rests on cryptographic verification rather than assumption.

Together, these components make AIdea a robust, scalable, and transparent solution for modern digital economies. Its design not only addresses the complex challenges of tokenized gaming but also sets a new standard for fairness, security, and sustainability in decentralized ecosystems. By harmonizing advanced AI with verifiable outcomes, AIdea empowers both players and game designers to thrive in a digital future that is truly equitable and innovative.